See all blog posts in this series:

- OpenShift Virtualization on IBM Cloud, part 1: Introduction

- OpenShift Virtualization on IBM Cloud, part 2: Becoming familiar with VPC

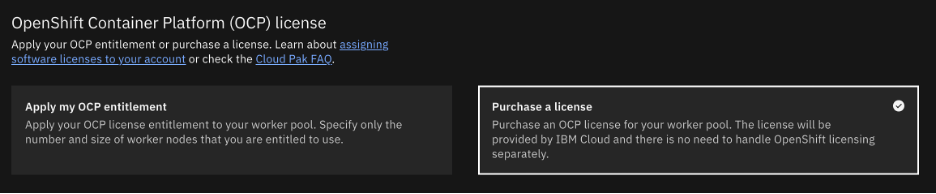

- OpenShift Virtualization on IBM Cloud, part 3: Deploying ROKS, ODF, and OCP Virt

- OpenShift Virtualization on IBM Cloud, part 4: Creating a virtual machine

- OpenShift Virtualization on IBM Cloud, part 5: Migrating a virtual machine

- OpenShift Virtualization on IBM Cloud, part 6: Backup and restore

- OpenShift Virtualization on IBM Cloud, part 7: Dynamic resource scheduling

In this post, we will install the OpenShift Migration Toolkit for Virtualization and use it to migrate a VMware virtual machine to OpenShift Virtualization.

Install the migration toolkit

In the OpenShift web UI, navigate to Operators | OperatorHub and search for “migration.” Select the “Migration Toolkit for Virtualization Operator,” then click “Install.” I didn’t customize any of the parameters.

Afterwards, the console prompted me to create a custom resource for the ForkliftController.

After a short time, a Migration for Virtualization menu item appears in the web UI.
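For reference, the custom resource created at this step looks roughly like the following. This is a minimal sketch based on the MTV documentation, assuming the operator was installed into the default openshift-mtv project; the console form may set additional fields.

```yaml
apiVersion: forklift.konveyor.io/v1beta1
kind: ForkliftController
metadata:
  name: forklift-controller
  namespace: openshift-mtv   # assumed: the operator's default project
spec:
  # Tells the controller it is managed by the Operator Lifecycle Manager
  olm_managed: true
```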

Preparation

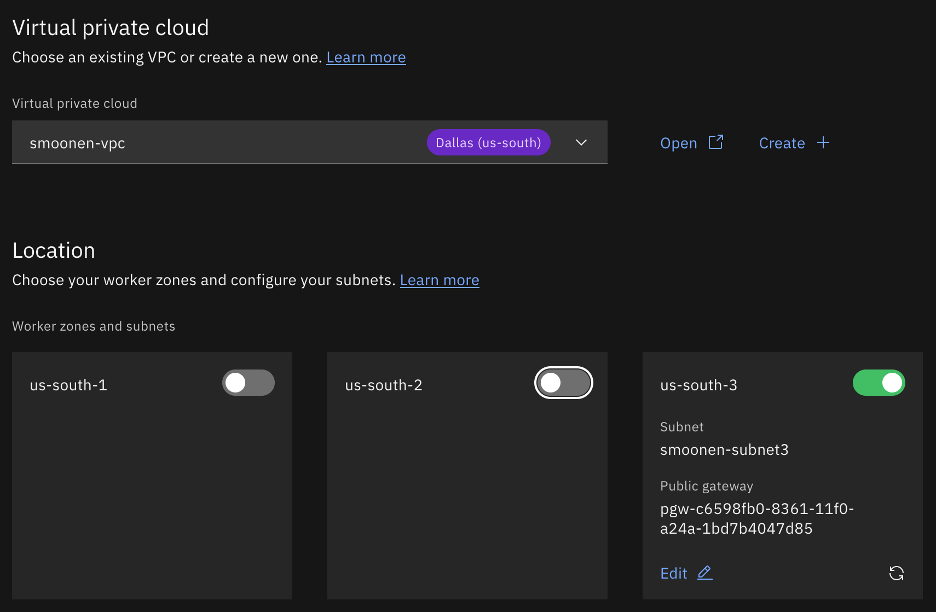

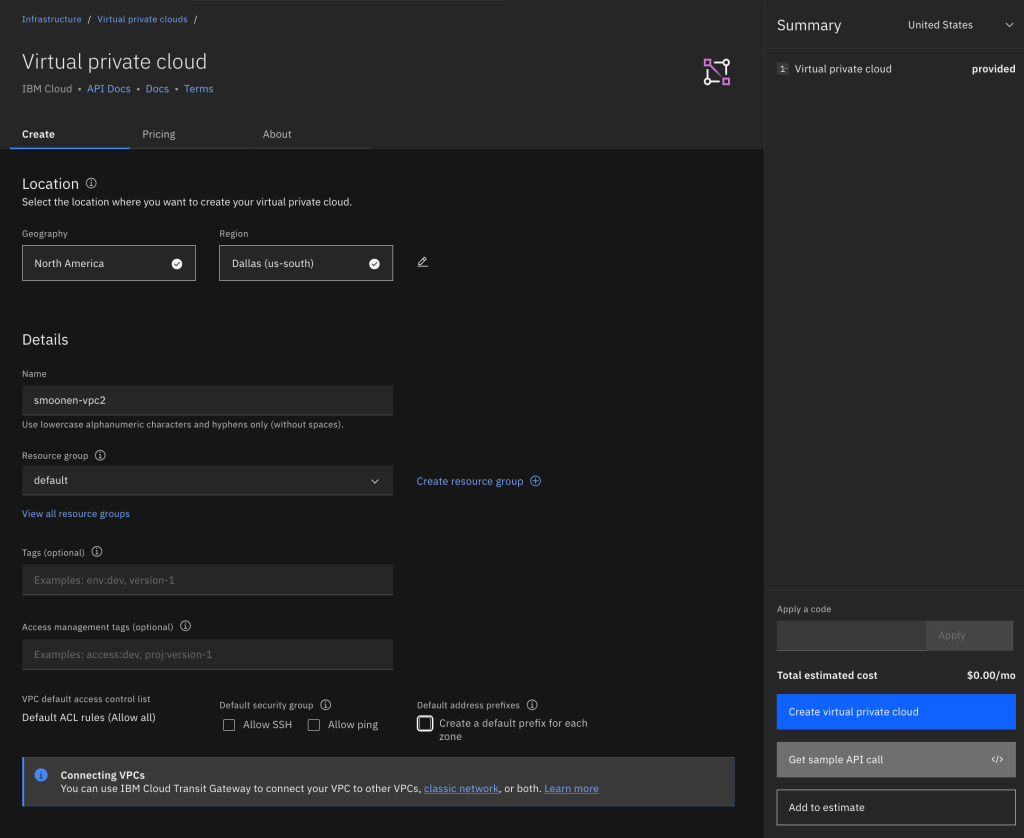

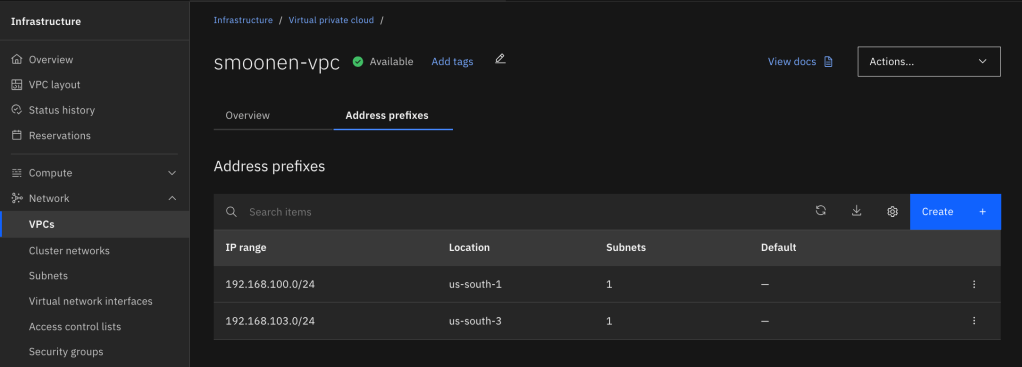

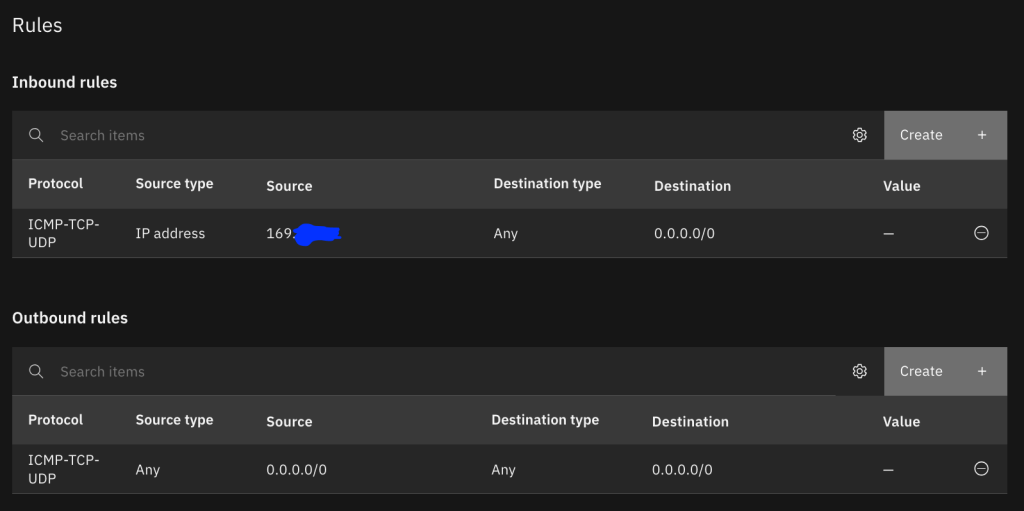

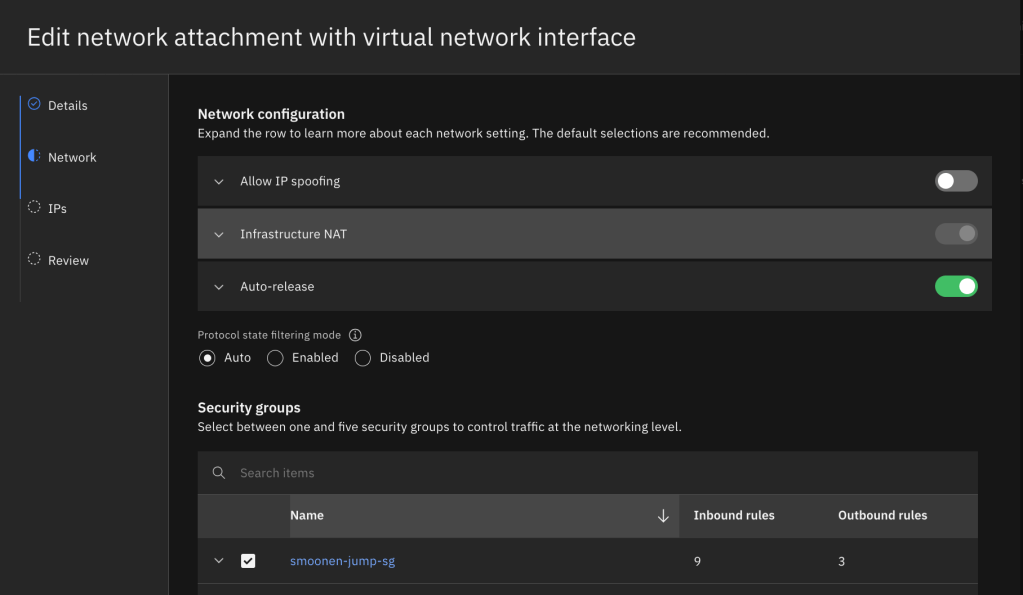

I deployed an Ubuntu VM into an overlay network in an IBM Cloud “VCS” instance (AKA “VCF on Classic Automated”) and connected my classic account to my VPC using an IBM Cloud Transit Gateway. This particular VCS instance used NFS storage.

Interestingly, VMware disables Changed Block Tracking (CBT) by default for virtual machines. Later in my testing, the migration provider warned me that CBT was disabled. I followed Broadcom’s instructions to enable it manually, although this required me to reboot my VM.

In order to create a migration provider, Red Hat recommends that you create a “VDDK image.” Recent versions of the migration operator will build this for you; all you need to do is provide the VDDK (Virtual Disk Development Kit) archive downloaded from Broadcom. See Red Hat’s instructions.

Although the migration provider is able to connect to vCenter by IP address rather than hostname, the final migration itself will attempt to connect to a vSphere host by its hostname. Therefore the cluster must be able to resolve names in the VCS instance’s domain, which means forwarding DNS queries for that domain to the instance’s domain controllers. I followed the Red Hat instructions to configure a forwarding zone in the cluster’s DNS operator. Here is the clause that I added.

    servers:
    - forwardPlugin:
        policy: Random
        upstreams:
        - 10.50.200.3
        - 10.50.200.4
      name: vcs-resolver
      zones:
      - smoonen.example.com

Create the migration provider

I then went into the Providers view in the OCP web UI and created a VMware provider. Be sure to add /sdk to the end of your vCenter URL as shown below. Note also that the migration operator automatically creates a “host” provider for you, representing your OCP cluster, in the openshift-mtv project. In order to meaningfully migrate your VMs to this provider, it is best to create your VMware provider in the same project.
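For readers who prefer manifests to the web UI, a provider like this one corresponds roughly to the following resource. Treat it as a sketch based on the MTV documentation rather than the exact object the console generates: the provider name, the Secret name, and the two angle-bracket placeholders are hypothetical and must be replaced with your own values.

```yaml
apiVersion: forklift.konveyor.io/v1beta1
kind: Provider
metadata:
  name: vmware                 # hypothetical provider name
  namespace: openshift-mtv     # same project as the built-in "host" provider
spec:
  type: vsphere
  # Note the /sdk suffix on the vCenter URL
  url: https://<vcenter-address>/sdk
  settings:
    sdkEndpoint: vcenter
    # Reference to the VDDK image built for you by the operator
    vddkInitImage: <vddk-image-reference>
  secret:
    name: vmware-credentials   # hypothetical Secret holding the vCenter credentials
    namespace: openshift-mtv
```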

Create the migration plan

In the OpenShift web console I created a migration plan.

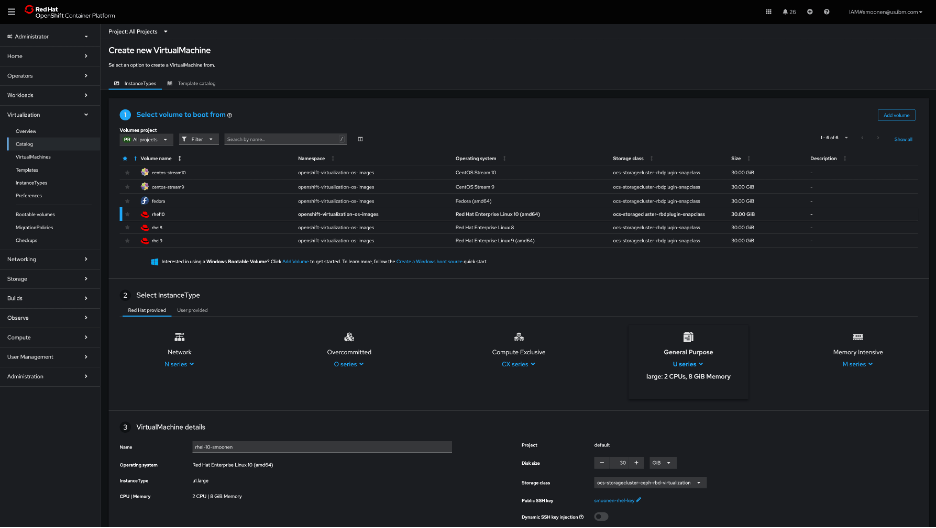

Then I selected my virtual machine.

Then I created a network mapping. The only currently supported network mapping in IBM Cloud ROKS is the pod network.
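Expressed as a manifest, a pod-network mapping might look like the sketch below. The map name, the source provider name, and the source network placeholder are hypothetical; the destination provider is the automatically created “host” provider in openshift-mtv.

```yaml
apiVersion: forklift.konveyor.io/v1beta1
kind: NetworkMap
metadata:
  name: vmware-to-pod          # hypothetical map name
  namespace: openshift-mtv
spec:
  map:
    - source:
        name: <source-network-name>   # the VM's network (port group) in vSphere
      destination:
        type: pod              # map to the cluster's pod network
  provider:
    source:
      name: vmware             # hypothetical VMware provider name
      namespace: openshift-mtv
    destination:
      name: host
      namespace: openshift-mtv
```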

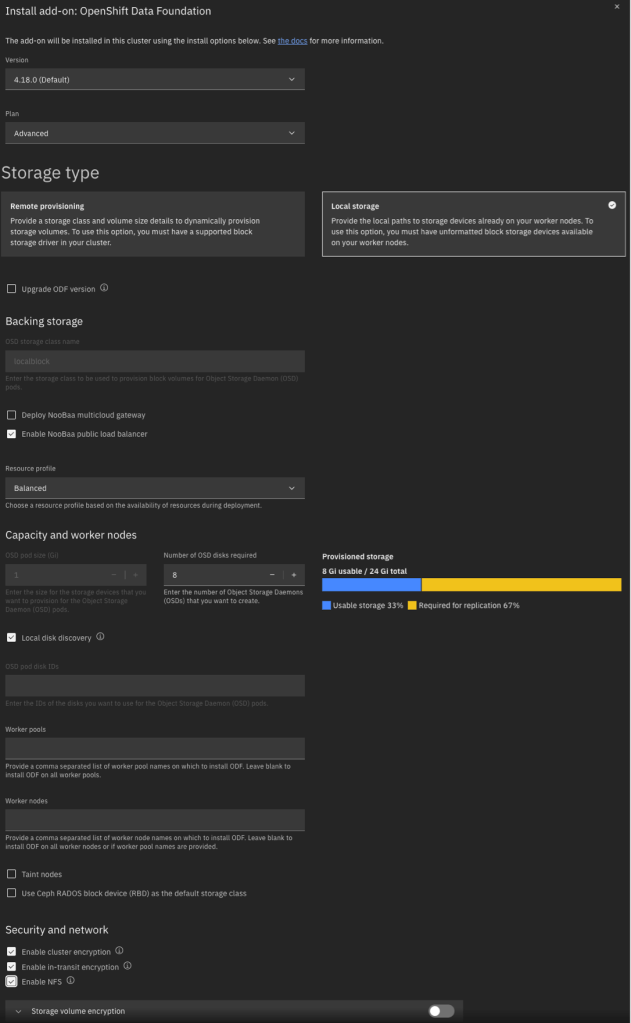

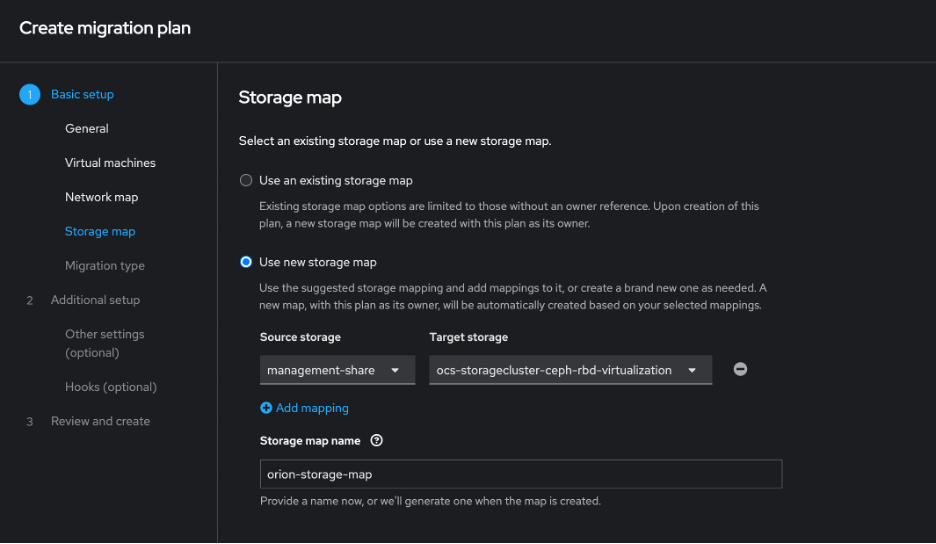

Then I created a storage mapping, being sure to select the ODF storage.
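The equivalent storage mapping, again as a hedged sketch: the storage class name below is an assumption (a class commonly created by ODF for virtualization workloads), so check `oc get storageclass` for the names in your cluster. The map name, provider name, and datastore placeholder are likewise hypothetical.

```yaml
apiVersion: forklift.konveyor.io/v1beta1
kind: StorageMap
metadata:
  name: vmware-to-odf          # hypothetical map name
  namespace: openshift-mtv
spec:
  map:
    - source:
        id: <source-datastore-id>     # identifier of the vSphere datastore
      destination:
        # Assumed ODF block storage class; yours may be named differently
        storageClass: ocs-storagecluster-ceph-rbd-virtualization
  provider:
    source:
      name: vmware             # hypothetical VMware provider name
      namespace: openshift-mtv
    destination:
      name: host
      namespace: openshift-mtv
```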

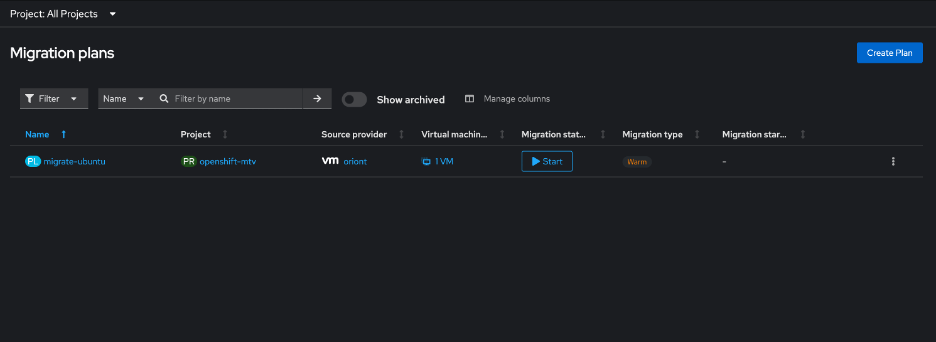

Then I chose a warm migration.

The preservation of static IPs is not currently supported in ROKS with the Calico provider.

I chose not to create migration hooks. You could use these, for example, to reconfigure the VM’s networking as part of the migration.
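Putting the choices so far together, a warm migration plan corresponds roughly to the following resource. This is a sketch under assumptions, not the exact object the console creates: the plan, provider, and map names and the VM name are hypothetical, and the target namespace reflects my choice of the default project.

```yaml
apiVersion: forklift.konveyor.io/v1beta1
kind: Plan
metadata:
  name: ubuntu-warm-plan       # hypothetical plan name
  namespace: openshift-mtv
spec:
  warm: true                   # warm migration: copy data while the VM keeps running
  targetNamespace: default     # project that will receive the migrated VM
  provider:
    source:
      name: vmware             # hypothetical VMware provider name
      namespace: openshift-mtv
    destination:
      name: host
      namespace: openshift-mtv
  map:
    network:
      name: vmware-to-pod      # hypothetical NetworkMap name
      namespace: openshift-mtv
    storage:
      name: vmware-to-odf      # hypothetical StorageMap name
      namespace: openshift-mtv
  vms:
    - name: <source-vm-name>   # the VM as named in vCenter
```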

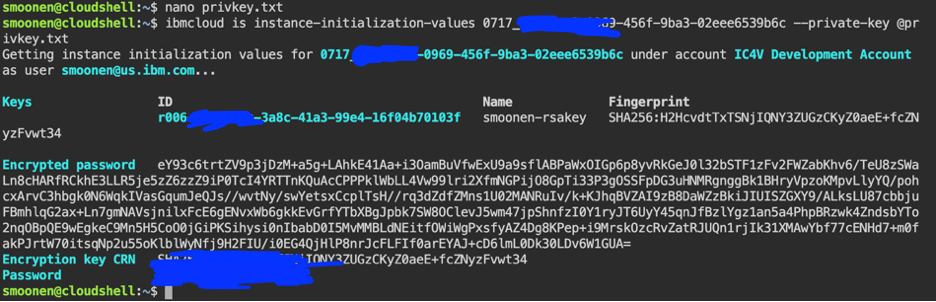

In my migration plan I chose to migrate the VM to the default project. The plan initially failed to initialize because it could not retrieve the VDDK image that had been built for me. Either before or after creating the migration plan, run the following command so that the default service account in the target project can pull from the cluster’s image registry:

oc adm policy add-cluster-role-to-user registry-viewer system:serviceaccount:default:default
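If you manage RBAC declaratively, the command above is roughly equivalent to applying a binding like this one. The binding name is hypothetical (the `oc` command generates its own); the role and subject are taken directly from the command.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: registry-viewer-default-sa   # hypothetical name
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: registry-viewer
subjects:
  # The default service account in the default (target) project
  - kind: ServiceAccount
    name: default
    namespace: default
```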

Then I clicked to start the migration.

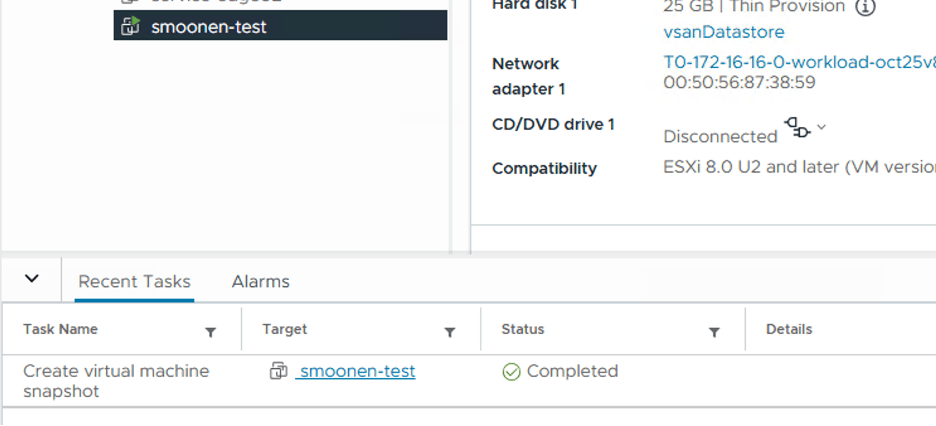

The migration created a snapshot and left my VM running.

After this completed, the VM remained running on the VMware side and was not yet instantiated on the ROKS side. The migration plan appeared in a “paused” state.

Next I performed the cutover. I had a choice to run it immediately or schedule it for a future time.
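Outside the web UI, running a plan and scheduling its cutover are expressed through a Migration resource. The sketch below follows the MTV documentation; the resource name, plan name, and timestamp are hypothetical, and I have not verified that the console produces exactly this object.

```yaml
apiVersion: forklift.konveyor.io/v1beta1
kind: Migration
metadata:
  name: ubuntu-warm-migration  # hypothetical name
  namespace: openshift-mtv
spec:
  plan:
    name: ubuntu-warm-plan     # hypothetical plan name
    namespace: openshift-mtv
  # Optional: ISO 8601 time with UTC offset at which the cutover should run
  cutover: "2025-01-01T03:00:00+00:00"
```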

The cutover stopped my VM on the VMware side, removed the snapshot, and then created and removed an additional snapshot; I presume this represented the replication of the remaining data as signaled by CBT.

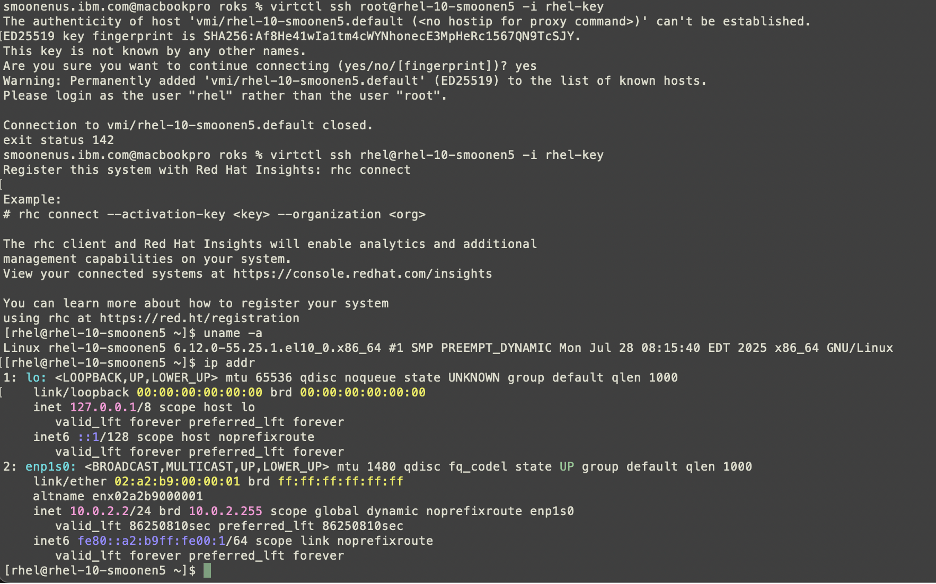

It then created and started a VM on the ROKS side.

In order to establish network connectivity for this VM, it was necessary to reconfigure its networking. The static IP must be exchanged for DHCP. In my case I also found that the network device name changed.
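On an Ubuntu guest that uses netplan, the change amounts to a configuration like the following sketch. The interface name `enp1s0` is an assumption; check `ip link` inside the migrated VM for the actual device name and edit the existing file under /etc/netplan accordingly.

```yaml
network:
  version: 2
  ethernets:
    enp1s0:          # assumed device name after migration; verify with `ip link`
      dhcp4: true    # replace the former static address with DHCP
```

After saving the file, `sudo netplan apply` activates the new configuration.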

For completeness I also installed qemu-guest-agent, but it appears this is not strictly necessary. I then edited /boot/efi/loader/loader.conf to force the loading of virtio modules per Ubuntu instructions. After doing so, it appears that they are in use.

In theory, MTV should have triggered the installation of both qemu-guest-agent and the virtio drivers. I observed that on first boot it did attempt to install the agent, but understandably failed because the network connection was not yet established.