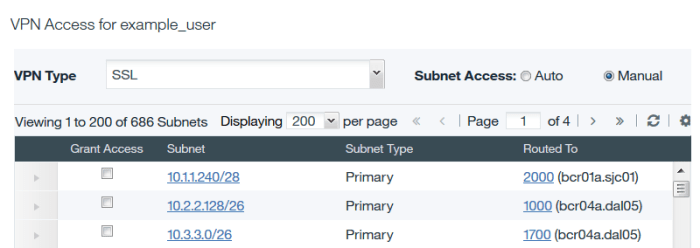

The IBM SoftLayer VPN supports connections to at most 64 of your private subnets. If your SoftLayer account has more than 64 private subnets, you need to switch your VPN’s subnet management from Automatic to Manual and select the specific subnets to which you want to connect.

Selecting subnets in the UI is cumbersome, especially if your account has hundreds of subnets. The subnets are not sorted, the dialog is small, and the pagination is slow.

However, it is possible to manage your VPN subnets programmatically using the SoftLayer API. I have created a Python script that allows you to manage your SoftLayer VPN subnet access. The script requires your SoftLayer username, API key, and a list of private IP addresses to which you want to connect. The script locates the subnets in your account that match your selected IP addresses, and assigns exactly these subnets to your SoftLayer VPN account.
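The matching step described above can be sketched in a few lines of Python. This is a minimal illustration, not the script itself. It assumes each subnet record is a dictionary with `id`, `networkIdentifier`, and `cidr` keys, and the function name `match_subnets` and the sample data are hypothetical. Fetching the subnets from your account and applying the result to your VPN user through the SoftLayer API are left out.

```python
import ipaddress

# The VPN connects to at most 64 private subnets (see above).
MAX_VPN_SUBNETS = 64


def match_subnets(subnets, addresses):
    """Return the IDs of the subnets that contain at least one address.

    `subnets` is a list of dicts with `id`, `networkIdentifier`, and `cidr`
    keys (an assumed record shape); `addresses` is a list of IP strings.
    """
    wanted = [ipaddress.ip_address(a) for a in addresses]
    matched = []
    for subnet in subnets:
        network = ipaddress.ip_network(
            f"{subnet['networkIdentifier']}/{subnet['cidr']}"
        )
        if any(ip in network for ip in wanted):
            matched.append(subnet["id"])
    if len(matched) > MAX_VPN_SUBNETS:
        raise ValueError(
            f"{len(matched)} subnets matched; the VPN supports at most "
            f"{MAX_VPN_SUBNETS}"
        )
    return matched


# Hypothetical sample data for illustration only.
subnets = [
    {"id": 101, "networkIdentifier": "10.10.0.0", "cidr": 26},
    {"id": 102, "networkIdentifier": "10.10.0.64", "cidr": 26},
    {"id": 103, "networkIdentifier": "10.20.5.0", "cidr": 24},
]
selected = match_subnets(subnets, ["10.10.0.70", "10.20.5.9"])
print(selected)  # [102, 103]
```

Because only the subnets that contain one of your addresses are returned, listing a single IP address per subnet you care about is enough to select it.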

After running the script, allow a few minutes for the changes to take effect.